A Fusion Approach for Efficient Human Skin Detection

Abstract: A reliable human skin detection method that adapts to different human skin colors and illumination conditions is essential for accurate human skin segmentation. Although various skin-color detection solutions have been applied successfully, they are prone to false skin detections and cannot cope with the variety of human skin colors across different ethnicities. Moreover, existing methods incur high computational costs. In this paper, we propose a novel human skin detection approach that combines a smoothed 2-D histogram and a Gaussian model for automatic human skin detection in color images. In our approach, an eye detector is used to refine the skin model for a specific person. The proposed approach reduces computational cost because no training is required, and it improves the accuracy of skin detection despite wide variation in ethnicity and illumination. To the best of our knowledge, this is the first method to employ a fusion strategy for this purpose. Qualitative and quantitative results on three standard public datasets, together with a comparison against state-of-the-art methods, show the effectiveness and robustness of the proposed approach.
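The abstract names two models, a smoothed 2-D histogram and a Gaussian, fused into one skin decision. The sketch below is a minimal illustration of that idea, not the authors' implementation. Everything specific in it is an assumption: the rg chromaticity space, the 32-bin resolution, the 3x3 box smoothing, the AND fusion rule, and the thresholds `t_hist` and `t_gauss`. The `samples` list stands in for person-specific skin pixels (in the paper these come from a region located with an eye detector; eye detection itself is omitted here).

```python
import math

BINS = 32  # hypothetical histogram resolution per chroma axis


def chroma(r, g, b):
    """Map an RGB pixel (0-255) to normalized rg chromaticity.

    ASSUMPTION: rg chromaticity is a stand-in; the color space used in
    the paper is not stated on this page.
    """
    s = r + g + b
    if s == 0:
        return 1 / 3, 1 / 3
    return r / s, g / s


def to_bin(x):
    """Quantize a chroma value in [0, 1] to a histogram bin index."""
    return min(int(x * BINS), BINS - 1)


def smoothed_histogram(samples):
    """2-D chroma histogram of skin samples, smoothed with a 3x3 box filter
    (ASSUMPTION: box filter) and scaled so the largest bin equals 1."""
    hist = [[0.0] * BINS for _ in range(BINS)]
    for px in samples:
        u, v = chroma(*px)
        hist[to_bin(u)][to_bin(v)] += 1.0
    smooth = [[0.0] * BINS for _ in range(BINS)]
    for i in range(BINS):
        for j in range(BINS):
            total, count = 0.0, 0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < BINS and 0 <= jj < BINS:
                        total += hist[ii][jj]
                        count += 1
            smooth[i][j] = total / count  # mean over in-bounds neighbors
    peak = max(max(row) for row in smooth) or 1.0
    return [[x / peak for x in row] for row in smooth]


def fit_gaussian(samples, eps=1e-6):
    """Fit the mean and 2x2 covariance of the samples' chroma values.
    samples must be non-empty; eps keeps the covariance invertible."""
    pts = [chroma(*px) for px in samples]
    n = len(pts)
    mu_u = sum(u for u, _ in pts) / n
    mu_v = sum(v for _, v in pts) / n
    suu = sum((u - mu_u) ** 2 for u, _ in pts) / n + eps
    svv = sum((v - mu_v) ** 2 for _, v in pts) / n + eps
    suv = sum((u - mu_u) * (v - mu_v) for u, v in pts) / n
    return mu_u, mu_v, suu, suv, svv


def gaussian_score(model, px):
    """Unnormalized Gaussian likelihood exp(-d^2 / 2), where d is the
    Mahalanobis distance of the pixel's chroma from the model mean."""
    mu_u, mu_v, suu, suv, svv = model
    u, v = chroma(*px)
    du, dv = u - mu_u, v - mu_v
    det = suu * svv - suv * suv
    d2 = (svv * du * du - 2 * suv * du * dv + suu * dv * dv) / det
    return math.exp(-0.5 * d2)


def is_skin(px, hist, gauss, t_hist=0.1, t_gauss=0.1):
    """ASSUMPTION: AND fusion, so both models must accept the pixel.
    The thresholds t_hist and t_gauss are hypothetical."""
    u, v = chroma(*px)
    return hist[to_bin(u)][to_bin(v)] > t_hist and gaussian_score(gauss, px) > t_gauss
```

For example, with `samples = [(200, 150, 120), (210, 160, 130), (190, 140, 110), (220, 170, 140), (205, 150, 125)]`, the call `is_skin((200, 150, 120), smoothed_histogram(samples), fit_gaussian(samples))` accepts the pixel, while a saturated blue such as `(30, 60, 200)` falls in an empty histogram bin and is rejected. The histogram captures an arbitrarily shaped skin region in chroma space, and the Gaussian adds a smooth, compact constraint around the person's mean skin color; requiring both is one simple way to suppress false detections.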

Journal Paper: IEEE Trans. on Industrial Informatics, vol. 8(1), pp. 138-147, 2012 (T-II 2012)


Wei Ren Tan, Chee Seng Chan, Pratheepan Yogarajah and Joan Condell

Dataset and Code:

A new dataset, the Pratheepan dataset, consists of images downloaded randomly from Google for human skin detection research. The images were captured with a range of different cameras, using different color enhancement and under different illuminations.

Paper Highlights:

- A novel human skin detection approach that combines a smoothed 2-D histogram and a Gaussian model for automatic human skin detection in color images.

- It reduces computational cost because no training is required, and it improves the accuracy of skin detection despite wide variation in ethnicity and illumination.

- A new dataset, the Pratheepan dataset, is introduced with ground truth.
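Ground-truth masks are what make quantitative comparison on such a dataset possible. This page does not restate which metrics the paper reports, so the sketch below assumes common pixel-wise measures: precision, recall, and F-measure computed from a predicted skin mask and a ground-truth mask. The function name `evaluate` and the mask representation (equal-sized 2-D lists of booleans, True = skin) are hypothetical choices for illustration.

```python
def evaluate(pred, truth):
    """Pixel-wise precision, recall and F-measure of a binary skin mask.

    pred and truth are equal-sized 2-D lists of booleans (True = skin).
    """
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    tp = fp = fn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1  # skin correctly detected
            elif p:
                fp += 1  # background labeled as skin (false skin detection)
            elif t:
                fn += 1  # skin that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f_measure": f}
```

For instance, `evaluate([[True, True], [False, False]], [[True, False], [True, False]])` has one true positive, one false positive and one false negative, giving precision, recall and F-measure of 0.5 each. Precision directly reflects the false skin detections the abstract identifies as a weakness of earlier methods.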

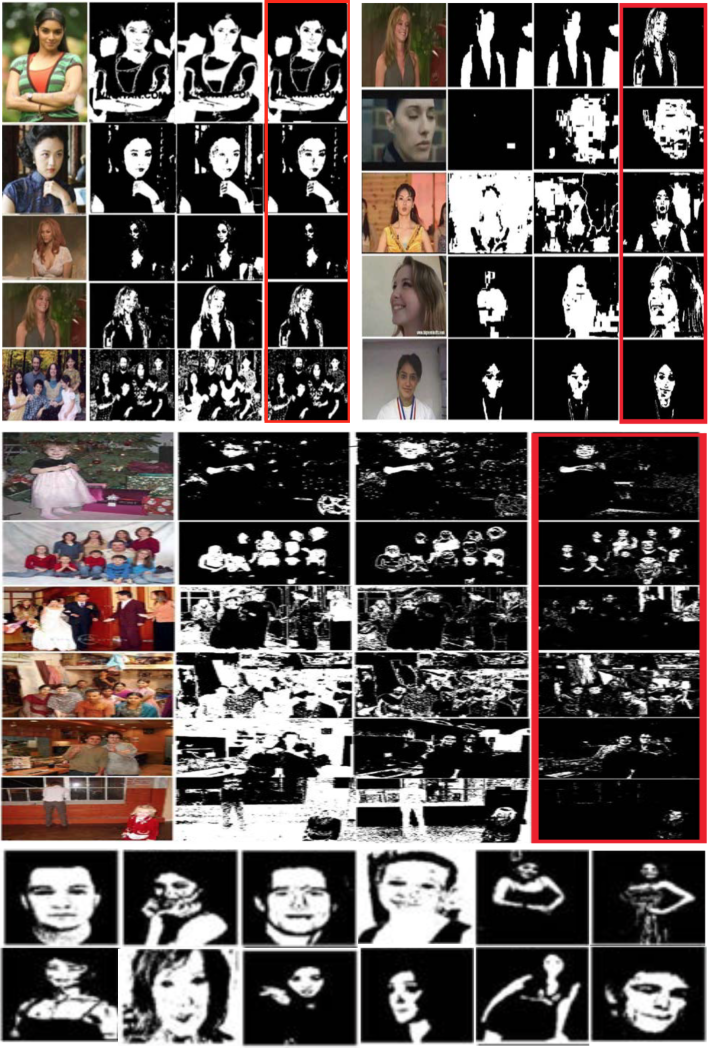

Example Results: In each example, from left to right: original image, static threshold method, dynamic threshold method, and the proposed method. Red boxes highlight the results of the proposed method.
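The caption does not say which static threshold rule was used in the comparison. As an illustration only, the sketch below implements one widely cited fixed RGB rule, often attributed to Kovac, Peer and Solina for uniform daylight illumination; it is not necessarily the baseline shown in the figure. The helper names `static_rgb_skin` and `static_mask` are hypothetical.

```python
def static_rgb_skin(r, g, b):
    """Fixed-threshold RGB skin rule (uniform daylight variant).

    The same thresholds apply to every image and every person, which is
    what makes the method "static".
    """
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15  # enough color spread
        and abs(r - g) > 15                   # red clearly dominates green
        and r > g and r > b
    )


def static_mask(image):
    """Apply the rule to a 2-D list of (r, g, b) tuples."""
    return [[static_rgb_skin(*px) for px in row] for row in image]
```

A well-lit skin-like pixel such as `(200, 150, 120)` passes, but a darker or underexposed skin-like pixel such as `(90, 60, 45)` is rejected because R is not above 95. This brittleness under changes in skin tone and lighting is the motivation for adapting the skin model to each person, as the proposed approach does.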

Acknowledgement

This research is based upon work supported by the High Impact Research Chancellory Grant UM.C/625/1/HIR/037, J0000073579 from the University of Malaya. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the University of Malaya.